Model Context Protocol: The Future of the Internet?

Explore how the new Model Context Protocol (MCP) connects AI directly to real data, promising a more reliable, interactive internet. Learn what MCP is, why it matters, and see practical steps for adding MCP support to a Laravel project.

Why MCP Could Change Everything

Some time ago, I discovered something called MCP (Model Context Protocol). It’s a new protocol developed by Anthropic, and it might just be the missing piece in the AI puzzle: the one that finally lets us build AI-powered applications that are both powerful and reliable.

So, what exactly is MCP? In simple terms, MCP is a standardized way for AI models (such as large language models, or LLMs) to connect directly to real, live data and services. Instead of guessing or hallucinating answers from their training data, LLMs can fetch fresh, factual information straight from the source.
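Under the hood, MCP messages are JSON-RPC 2.0. As a minimal sketch (Python, standard library only), here is how a client might build the `tools/call` request it sends when the model decides to use a tool. The tool name `list_articles` and its `limit` argument are hypothetical placeholders, not part of the protocol.

```python
import json
from itertools import count

# Monotonic request ids: JSON-RPC 2.0 pairs each response with its request by id.
_ids = count(1)


def tools_call_request(tool_name: str, arguments: dict) -> str:
    """Serialize an MCP `tools/call` request as a JSON-RPC 2.0 message."""
    message = {
        "jsonrpc": "2.0",
        "id": next(_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(message)


# Hypothetical tool name and arguments, for illustration only.
print(tools_call_request("list_articles", {"limit": 5}))
```

The server runs the named tool and replies with a JSON-RPC response carrying the same `id`, which is what the model then reasons over instead of its training data.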

Here’s why I think this could change the internet as we know it. For years, the main problem with LLMs has not been their intelligence but their reliability. You could ask ChatGPT or Claude something and never be quite sure whether the answer was real or just a very convincing hallucination. With MCP, the model gets raw data straight from the source, in a format it can understand and reliably work with.

Imagine a new internet where LLMs can connect to MCP servers everywhere. Want to buy a T-shirt? Just connect your LLM to the e-shop’s MCP endpoint and say: “Show me all the green T-shirts.” The LLM fetches the data, filters it, and displays only the relevant products, with no guessing and no hallucination. Like one? Just say: “Order the California T-shirt for me,” and it will handle the checkout, error-free.
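To make the T-shirt scenario concrete: an MCP server describes each tool with a name, a description, and a JSON Schema for its input (`inputSchema`), which is what lets the model turn “show me all the green T-shirts” into a structured call. The sketch below is hypothetical throughout: the `list_products` tool, its `color` parameter, and the catalog are invented for illustration, and the handler wraps its results in MCP’s text-content result shape.

```python
import json

# Hypothetical tool definition, in the shape an MCP server returns from `tools/list`.
LIST_PRODUCTS_TOOL = {
    "name": "list_products",
    "description": "List products in the shop, optionally filtered by color.",
    "inputSchema": {
        "type": "object",
        "properties": {
            "color": {"type": "string", "description": "Color to filter by, e.g. 'green'."}
        },
    },
}

# Invented sample catalog; a real server would query its database instead.
CATALOG = [
    {"sku": "TS-001", "name": "California T-shirt", "color": "green"},
    {"sku": "TS-002", "name": "Prague T-shirt", "color": "blue"},
    {"sku": "TS-003", "name": "Forest T-shirt", "color": "green"},
]


def handle_list_products(arguments: dict) -> dict:
    """Filter the catalog and wrap the matches as an MCP tool result."""
    color = arguments.get("color")
    matches = [p for p in CATALOG if color is None or p["color"] == color.lower()]
    # Tool results carry a list of content items; plain text is the simplest kind.
    return {"content": [{"type": "text", "text": json.dumps(matches)}], "isError": False}


result = handle_list_products({"color": "Green"})
print(result["content"][0]["text"])
```

The filtering happens on the server, against real data; the model only chooses the tool and fills in `color`.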

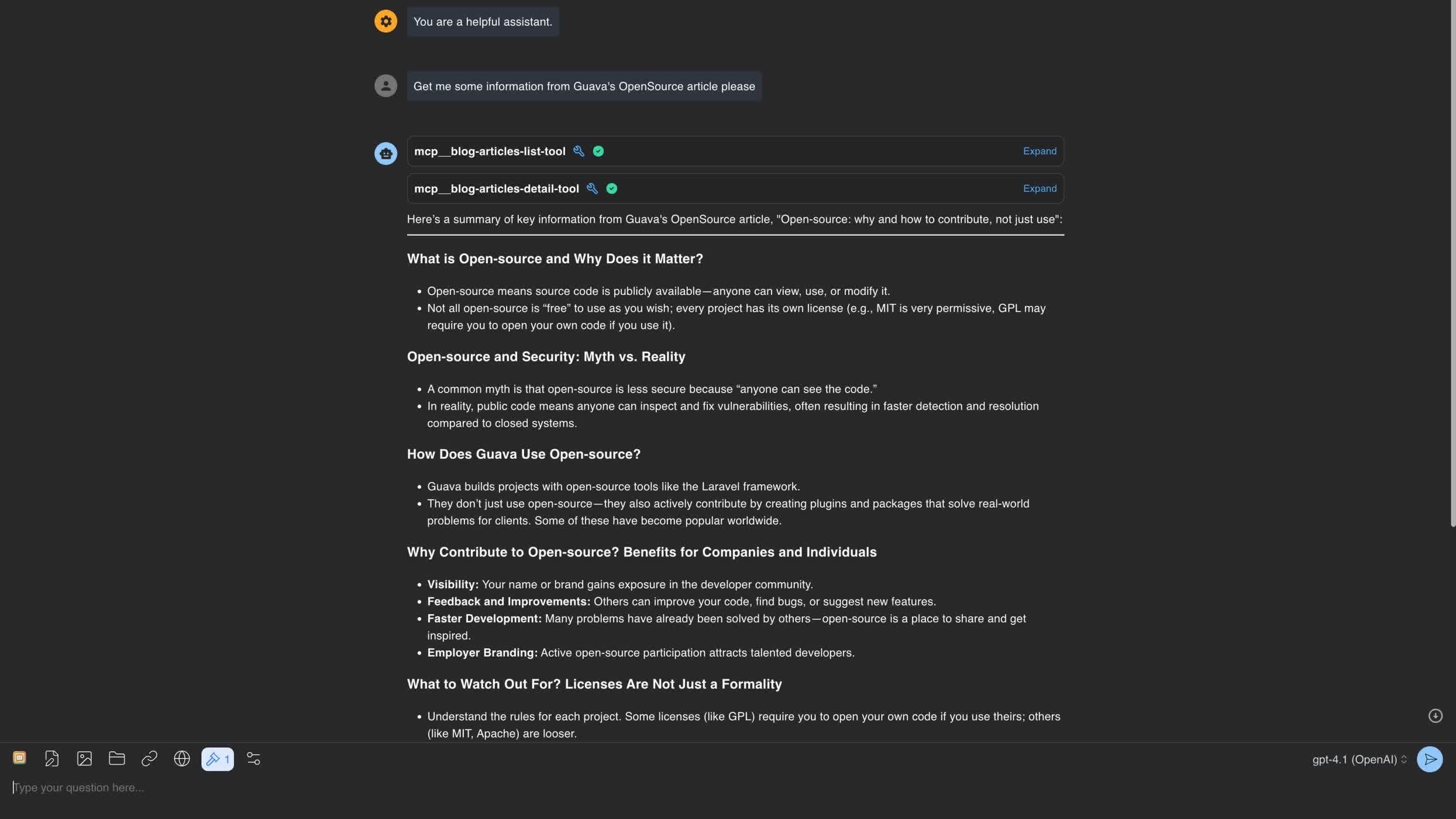

We wanted to see this in action, so we implemented MCP on our own Guava website. Now, you can ask our site what Laravel version it’s running, list all our blog articles, or even request detailed information about any article, all through an LLM interface. Check out the examples below.

It’s early days: MCP was released only a few months ago. Right now, most LLM clients (like OpenAI’s ChatGPT) support only a subset of MCP features, mainly tools. MCP also defines resources, prompts, and resource templates, but for now ChatGPT is limited to tools. Still, even with just tools, you can do a lot. The future looks bright, and as client support grows, so will the range of what you can build.
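Each of these feature types maps onto its own JSON-RPC methods in the MCP specification, and a server advertises which ones it offers in the `capabilities` object of its `initialize` response. A minimal sketch of how a client could work out what it is allowed to call: the method names follow the specification (resource templates live under `resources`), while the `callable_methods` helper itself is illustrative.

```python
# JSON-RPC methods a client can call for each server capability in the MCP spec.
METHODS_BY_CAPABILITY = {
    "tools": ["tools/list", "tools/call"],
    "resources": ["resources/list", "resources/read", "resources/templates/list"],
    "prompts": ["prompts/list", "prompts/get"],
}


def callable_methods(server_capabilities: dict) -> list[str]:
    """Return the methods a client may call, given the server's advertised capabilities."""
    methods = []
    for capability, names in METHODS_BY_CAPABILITY.items():
        # A capability counts as offered if its key is present, even with an empty object.
        if capability in server_capabilities:
            methods.extend(names)
    return methods


# Example: a server that exposes tools only.
print(callable_methods({"tools": {"listChanged": False}}))
```

A tools-only client like the one described above simply never issues the `resources/*` or `prompts/*` calls, even when the server offers them.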

Part 2: How We Integrated MCP Into Guava (for Developers)

Sadly, there’s no official Laravel SDK for MCP yet. But the ecosystem is moving fast, and there are already a few promising packages out there. After some research, I chose to use opgginc/laravel-mcp-server.

Quick Start: Installing MCP Server in Laravel

Here’s a mini-tutorial for adding MCP support to your Laravel project:

Install the package:

```bash
composer require opgginc/laravel-mcp-server
```

Publish the config:

```bash
php artisan vendor:publish --provider="OPGG\LaravelMcpServer\LaravelMcpServerServiceProvider"
```

Configure your MCP endpoints:

Define your tools (e.g., to expose your blog articles, Laravel version, etc.).

Add them to the published config file.

Optionally, you can also create resource endpoints and resource templates.
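Whatever package you use, defining a tool comes down to pairing a name with a handler and routing incoming `tools/call` requests to it. The sketch below shows that routing in Python rather than PHP, so it is not the opgginc/laravel-mcp-server API; the tool names (`get_laravel_version`, `list_articles`), the `tool` decorator, and the returned values are hypothetical stand-ins for what a Laravel app would read from the framework and its database.

```python
import json
from typing import Callable

# Hypothetical registry: tool name -> handler taking the call's `arguments` dict.
ToolHandler = Callable[[dict], str]
TOOLS: dict[str, ToolHandler] = {}


def tool(name: str):
    """Decorator that registers a handler under an MCP tool name."""
    def register(fn: ToolHandler) -> ToolHandler:
        TOOLS[name] = fn
        return fn
    return register


@tool("get_laravel_version")
def get_laravel_version(arguments: dict) -> str:
    # Placeholder: a Laravel app would report its real framework version here.
    return "placeholder-version"


@tool("list_articles")
def list_articles(arguments: dict) -> str:
    # Placeholder data standing in for a database query.
    return json.dumps([{"slug": "hello-mcp", "title": "Hello MCP"}])


def dispatch(request: dict) -> dict:
    """Route a parsed `tools/call` request to its handler and build the JSON-RPC response."""
    params = request.get("params", {})
    handler = TOOLS.get(params.get("name"))
    if handler is None:
        # Unknown tool: JSON-RPC "invalid params" error, as in the MCP spec's example.
        return {"jsonrpc": "2.0", "id": request.get("id"),
                "error": {"code": -32602, "message": f"Unknown tool: {params.get('name')}"}}
    text = handler(params.get("arguments", {}))
    return {"jsonrpc": "2.0", "id": request.get("id"),
            "result": {"content": [{"type": "text", "text": text}], "isError": False}}


response = dispatch({"jsonrpc": "2.0", "id": 7, "method": "tools/call",
                     "params": {"name": "list_articles", "arguments": {}}})
print(response["result"]["content"][0]["text"])
```

A server package typically hides this routing behind its own classes and config; the point here is only the request-to-handler-to-response flow.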

Test your MCP implementation:

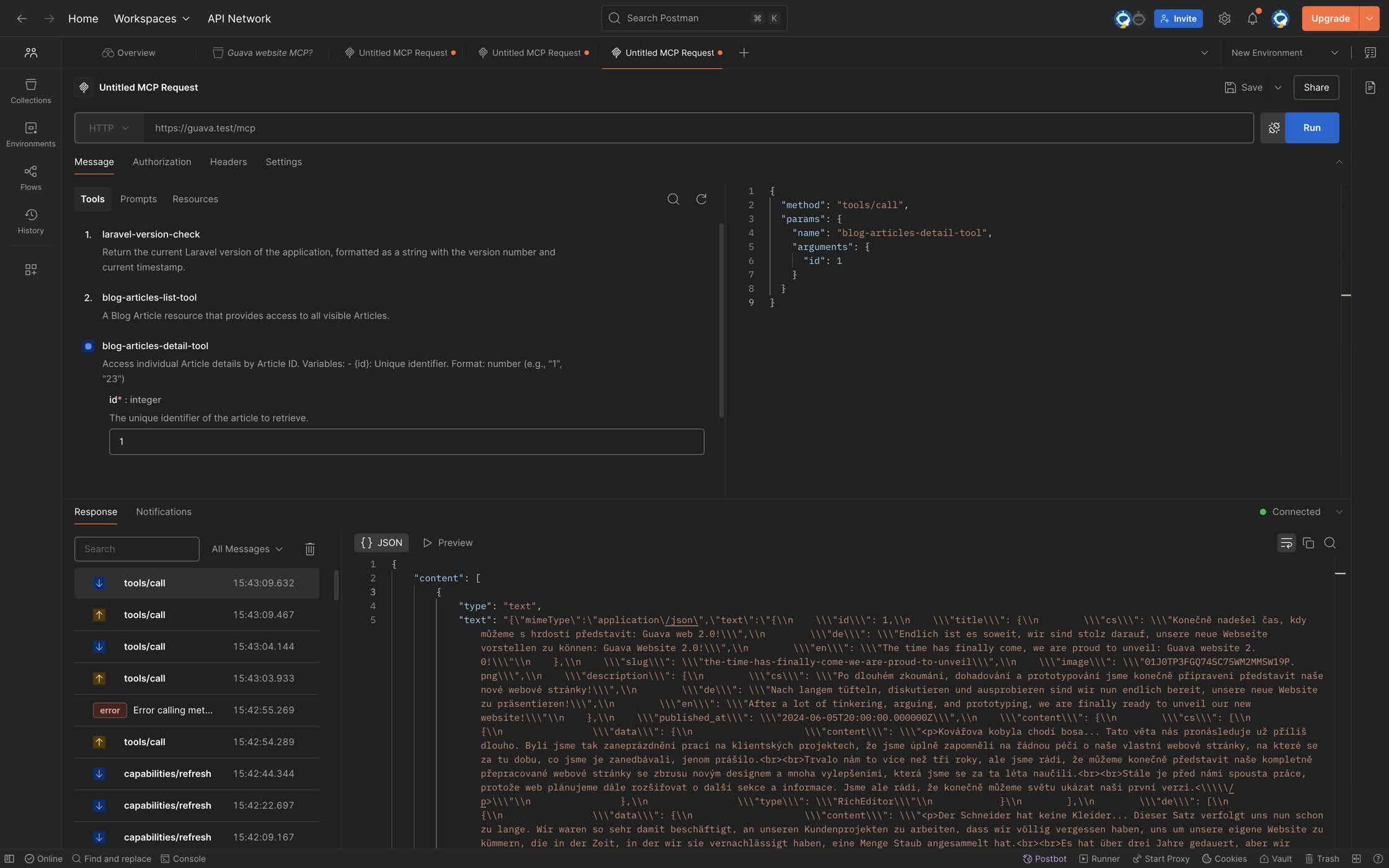

I haven’t yet found a client that supports every MCP feature, but you can use a tool like Postman to validate your endpoints.

Alternatively, check out the MCP Inspector, a handy official tool for validating your MCP endpoints.
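When testing with Postman, you send the same JSON-RPC messages a real client would, in order: `initialize`, then the `notifications/initialized` notification, then calls such as `tools/list`. A minimal sketch that prints those bodies, assuming the Streamable HTTP transport (one POST endpoint) and protocol version `2025-03-26`; check which version and transport your server package actually implements.

```python
import json

PROTOCOL_VERSION = "2025-03-26"  # assumption: use the version your server supports

# Headers a Streamable HTTP client sends with each POST.
HEADERS = {
    "Content-Type": "application/json",
    "Accept": "application/json, text/event-stream",
}


def handshake_and_list() -> list[dict]:
    """JSON-RPC bodies for a minimal test session: handshake, then list tools."""
    return [
        {
            "jsonrpc": "2.0",
            "id": 1,
            "method": "initialize",
            "params": {
                "protocolVersion": PROTOCOL_VERSION,
                "capabilities": {},
                "clientInfo": {"name": "postman-test", "version": "0.1.0"},
            },
        },
        # A notification has no id, so the server sends no JSON-RPC response for it.
        {"jsonrpc": "2.0", "method": "notifications/initialized"},
        {"jsonrpc": "2.0", "id": 2, "method": "tools/list"},
    ]


for body in handshake_and_list():
    # Paste each body into a separate POST request; if the server returned an
    # Mcp-Session-Id header on initialize, send it back on the later requests.
    print(json.dumps(body))
```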

I created a resource for our blog articles and validated it with Postman. This let me see exactly what the LLM would receive when calling our MCP server.
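Resources are read rather than called: the client sends `resources/read` with a URI, and the server answers with a list of `contents`. Resource templates add URI templates with placeholders that the client fills in. A minimal sketch of both steps; the `articles://{slug}` template and the sample response are hypothetical, and `expand` handles only simple `{name}` placeholders, not the full RFC 6570 URI Template syntax.

```python
import json
import re
from urllib.parse import quote

# Hypothetical resource template for a single blog article.
ARTICLE_TEMPLATE = "articles://{slug}"


def expand(template: str, values: dict) -> str:
    """Fill simple {name} placeholders, percent-encoding each value."""
    return re.sub(r"\{(\w+)\}", lambda m: quote(str(values[m.group(1)]), safe=""), template)


def read_request(request_id: int, uri: str) -> dict:
    """Build a `resources/read` JSON-RPC request for one URI."""
    return {"jsonrpc": "2.0", "id": request_id, "method": "resources/read", "params": {"uri": uri}}


def text_contents(response: dict) -> list[str]:
    """Collect the text parts of a `resources/read` result (binary parts use `blob`)."""
    return [c["text"] for c in response["result"]["contents"] if "text" in c]


uri = expand(ARTICLE_TEMPLATE, {"slug": "hello-mcp"})
print(json.dumps(read_request(3, uri)))

# Invented example of what a server might send back.
sample = {"jsonrpc": "2.0", "id": 3, "result": {"contents": [
    {"uri": uri, "mimeType": "application/json", "text": "{\"title\": \"Hello MCP\"}"}]}}
print(text_contents(sample))
```

Inspecting `contents` like this is the Postman-side view of exactly what the LLM receives.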

Testing MCP in Production

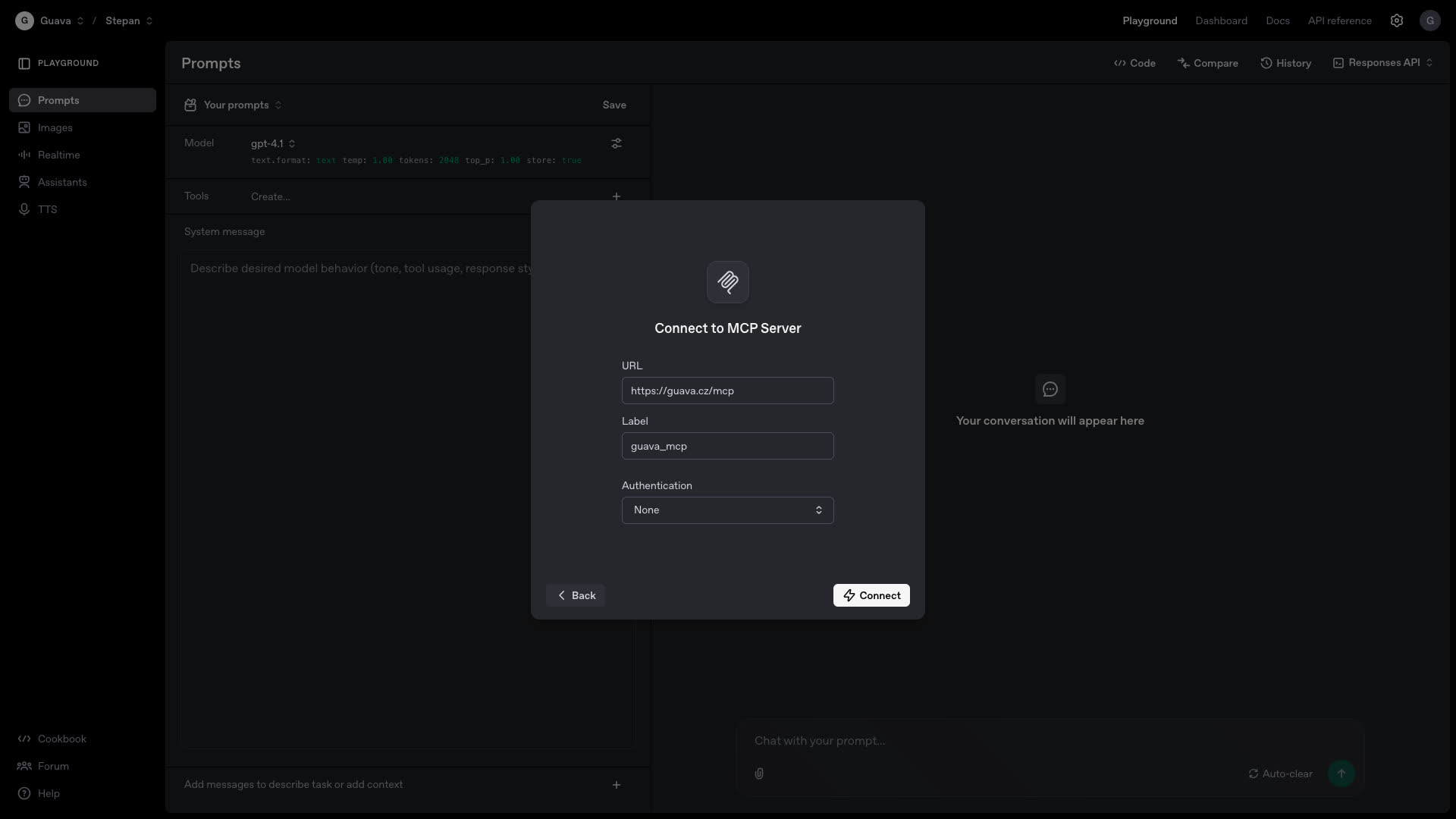

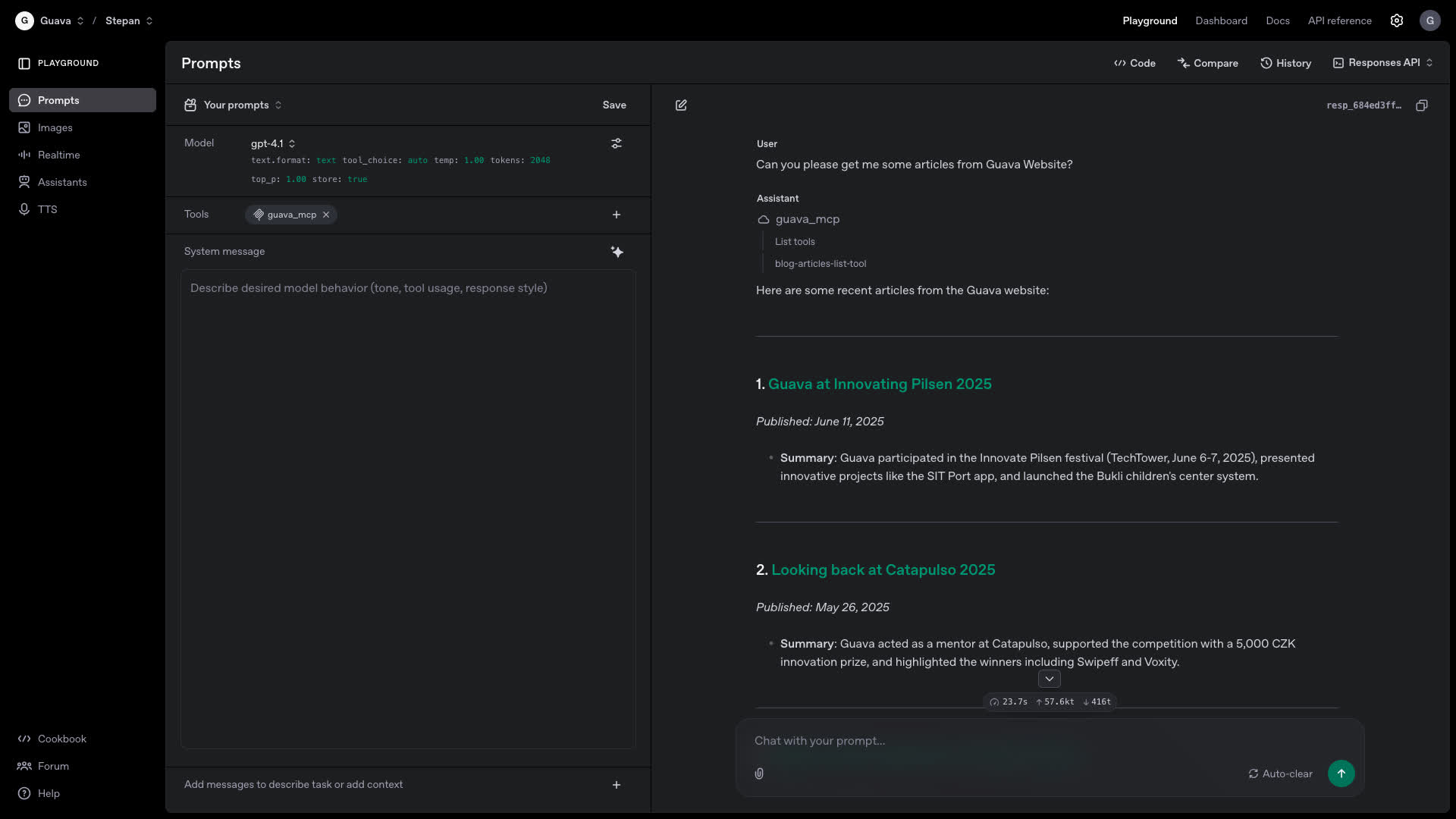

Want to see it live? Here’s how you can test your MCP server with OpenAI:

Open the OpenAI Platform Playground and select Prompts.

Select your model.

Create a new tool and connect it to your MCP server endpoint.

Now, ask the LLM something like “List all blog articles,” or “What Laravel version are you running?” The LLM will automatically call the right tool, fetch the data from your server, and give you a real, up-to-date answer.
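The Playground steps correspond roughly to a request to OpenAI’s Responses API with a remote MCP tool attached. A minimal sketch that only builds the request body with the standard library (no SDK, no network call); the model name is an example, `https://guava.cz/mcp` is assumed to be the server URL, and `require_approval: "never"` skips the per-call approval step, which you may not want for tools that change data.

```python
import json


def responses_request(model: str, server_label: str, server_url: str, prompt: str) -> dict:
    """Build a Responses API request body that lets the model call a remote MCP server."""
    return {
        "model": model,
        "tools": [
            {
                "type": "mcp",
                "server_label": server_label,
                "server_url": server_url,
                # Skip the approval round-trip; consider "always" for tools with side effects.
                "require_approval": "never",
            }
        ],
        "input": prompt,
    }


body = responses_request("gpt-4.1", "guava", "https://guava.cz/mcp", "List all blog articles")
# POST this JSON to https://api.openai.com/v1/responses with an Authorization: Bearer header.
print(json.dumps(body, indent=2))
```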

Isn’t that the future of the internet? AI agents that don’t just guess, but actually know.

Want to learn more or see a live demo? Try out the MCP endpoints on our site (https://guava.cz/mcp), or drop us a line. The future is closer than you think.